Potential suppliers are asked to submit information about technology maturity and applications

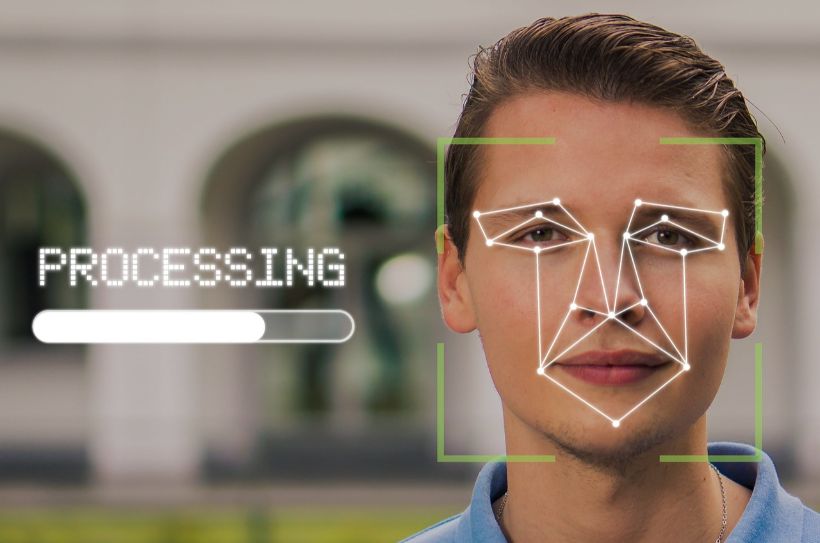

The Defence and Security Accelerator (Dasa) has launched a market exploration of facial recognition technologies that could be implemented by police forces and security agencies within the next 18 months. Dasa is a Ministry of Defence unit that finds and funds innovation.

Dasa is interested in innovative capabilities that can help identify people through facial features and landmarks “that support integration, algorithm development and analytics”. Potential suppliers are asked to submit information about their innovations and technology maturity, which will be shared with the Home Office by 12 October.

The MoD unit says that technologies must be “secure, accurate, explainable and free from bias”, adding that it is not interested in systems that aim to detect physical or behavioural characteristics, such as age estimation or lie detection. It does not require costed proposals, as the exercise is a request for information rather than a procurement competition.

Police use of facial recognition technology has drawn criticism from campaigners, who argue it undermines civil liberties. In 2020, the Court of Appeal found that South Wales Police’s use of the technology from 2017 was unlawful. Earlier this year, the force said it had carried out a series of trials and evaluations showing its use does not discriminate by gender, age or race, and that it planned to resume use.

In July 2022, the Metropolitan Police deployed live facial recognition around Oxford Circus, leading to several arrests. The force publicised its use, and campaigners organised protests.

The Home Office recently signed a contract with IBM worth £54.7m for a biometric data platform that will include a service allowing law enforcement services across the UK to match facial image data.